Stanford and Google DeepMind researchers have created AI that can replicate human personalities with uncanny accuracy after just a two-hour conversation.

By interviewing 1,052 people from diverse backgrounds, they built what they call “simulation agents” – digital copies that were uncannily effective at predicting their human counterparts’ beliefs, attitudes, and behaviors.

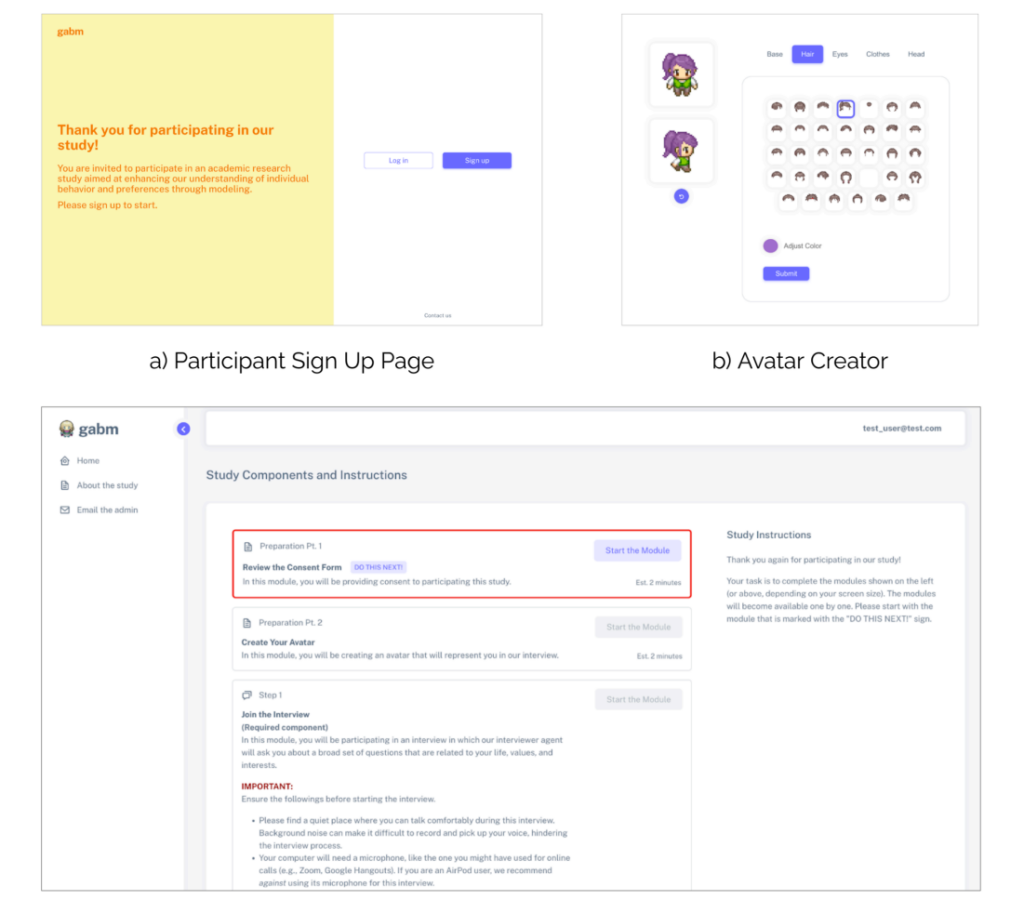

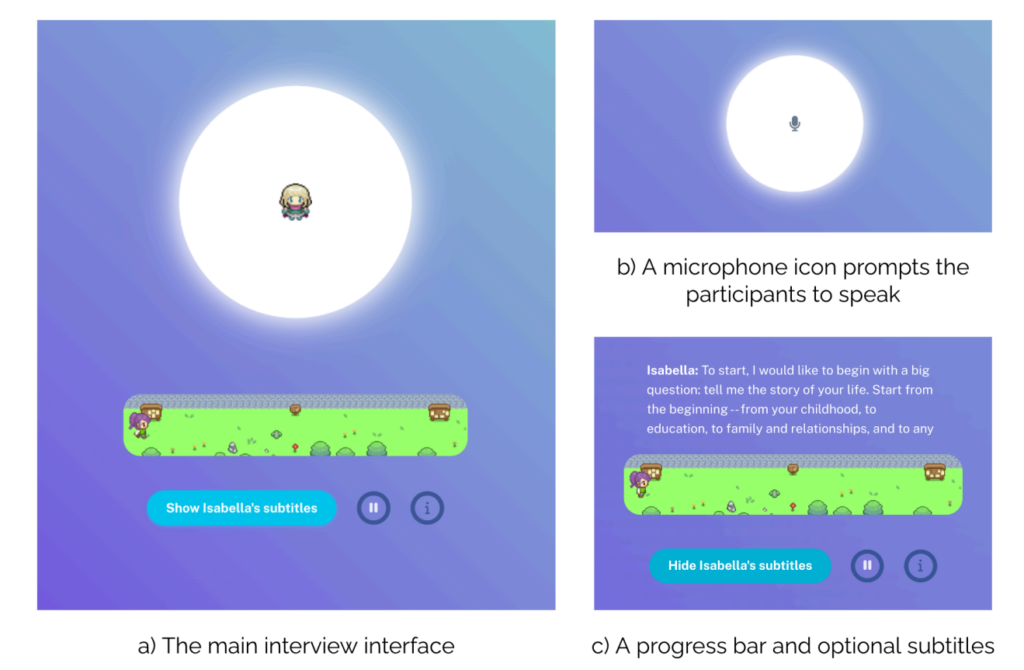

To create the digital copies, the team uses data from an “AI interviewer” designed to engage participants in natural conversation.

The AI interviewer asks questions and generates personalized follow-up questions – an average of 82 per session – exploring everything from childhood memories to political views.

Each two-hour session produced a detailed transcript averaging 6,500 words.

For example, when a participant mentions their childhood hometown, the AI might probe deeper, asking about specific memories or experiences. By simulating a natural flow of conversation, the system captures nuanced personal information that standard surveys tend to skim over.

Behind the scenes, the study documents what the researchers call “expert reflection” – prompting large language models (LLMs) to analyze each conversation from four distinct professional viewpoints:

- As a psychologist, it identifies specific personality traits and emotional patterns – for instance, noting how someone values independence based on their descriptions of family relationships.

- Through a behavioral economist’s lens, it extracts insights about financial decision-making and risk tolerance, such as how they approach savings or career choices.

- The political scientist perspective maps ideological leanings and policy preferences across various issues.

- A demographic analysis captures socioeconomic factors and life circumstances.
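The expert-reflection step can be pictured as running the same transcript past four differently framed prompts, one per viewpoint. The Python sketch below is a minimal illustration under stated assumptions: the `EXPERT_LENSES` wording, the `build_reflection_prompts` and `reflect` helpers, and the caller-supplied `call_llm` function are hypothetical names invented here, not the researchers’ actual prompts or code.

```python
from typing import Callable, Dict

# Hypothetical task wording for each of the four expert viewpoints.
EXPERT_LENSES: Dict[str, str] = {
    "psychologist": "Identify personality traits and emotional patterns.",
    "behavioral economist": "Describe financial decision-making and risk tolerance.",
    "political scientist": "Map ideological leanings and policy preferences.",
    "demographer": "Summarize socioeconomic factors and life circumstances.",
}


def build_reflection_prompts(transcript: str) -> Dict[str, str]:
    """Return one analysis prompt per expert viewpoint for a single interview."""
    return {
        role: (
            f"You are a {role}. Read the interview transcript below.\n"
            f"{task}\n\nTranscript:\n{transcript}"
        )
        for role, task in EXPERT_LENSES.items()
    }


def reflect(transcript: str, call_llm: Callable[[str], str]) -> Dict[str, str]:
    """Send each expert prompt to a caller-supplied model function (a placeholder)."""
    prompts = build_reflection_prompts(transcript)
    return {role: call_llm(prompt) for role, prompt in prompts.items()}


# Usage with a stand-in "model" that simply returns the first line of each prompt.
notes = reflect(
    "Q: Where did you grow up?\nA: A small farming town.",
    lambda prompt: prompt.splitlines()[0],
)
for role, note in notes.items():
    print(role, "->", note)
```

Keeping the model call behind a plain function argument lets the sketch run without any particular LLM provider; in practice `call_llm` would wrap whichever model API is in use.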

The researchers found that this interview-based approach outperformed comparable methods – such as mining social media data – by a substantial margin.

Testing the digital copies

So how good were the AI copies? The researchers put them through a battery of tests to find out.

First, they used the General Social Survey – a measure of social attitudes that asks questions about everything from political views to religious beliefs. Here, the AI copies reproduced their human counterparts’ answers 85% as accurately as the participants themselves did when they retook the survey two weeks later.
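The 85% figure is a normalized score rather than a raw hit rate: the AI copy’s agreement with a participant’s original answers is divided by how consistently that participant reproduced their own answers on a retest two weeks later. The Python sketch below shows that arithmetic for a single participant using made-up answers to five questions; the `accuracy` and `normalized_accuracy` helpers and the sample data are hypothetical illustrations, not the study’s code or data.

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of questions on which two answer lists agree."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("answer lists must be non-empty and the same length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def normalized_accuracy(agent: Sequence[str], first_session: Sequence[str],
                        retest: Sequence[str]) -> float:
    """Agent accuracy divided by the participant's own test-retest consistency."""
    self_consistency = accuracy(retest, first_session)
    if self_consistency == 0:
        raise ValueError("participant never repeated an answer; ratio undefined")
    return accuracy(agent, first_session) / self_consistency


# Made-up answers to five survey questions (illustrative only).
first = ["agree", "no", "often", "liberal", "yes"]
retest = ["agree", "no", "sometimes", "liberal", "yes"]  # participant later: 4 of 5 match
agent = ["agree", "yes", "sometimes", "liberal", "yes"]  # AI copy: 3 of 5 match

print(round(normalized_accuracy(agent, first, retest), 2))  # → 0.75 (0.6 / 0.8)
```

Dividing by self-consistency means a copy can score near 100% even when raw agreement is well below 100%, because people do not answer identically twice either.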

On the Big Five personality test, which measures traits like openness and conscientiousness through 44 different questions, the AI predictions reached a normalized correlation of about 0.80 with human responses, again benchmarked against participants’ own consistency. The system was particularly good at capturing traits like extraversion and neuroticism.

Economic game testing revealed interesting limitations, however. In the “Dictator Game,” where participants decide how to split money with others, the AI struggled to perfectly predict human generosity.

In the “Trust Game,” which tests willingness to cooperate with others for mutual benefit, the digital copies matched human choices only about two-thirds of the time.

This suggests that while AI can grasp our stated values, it still can’t fully capture the nuances of human social decision-making (yet, of course).

Real-world experiments

Not stopping there, the researchers also subjected the copies to five classic social psychology experiments.

In one experiment testing how perceived intent affects blame, both humans and their AI copies showed similar patterns, assigning more blame when harmful actions seemed intentional.

Another experiment examined how fairness influences emotional responses, with AI copies accurately predicting human reactions to fair versus unfair treatment.

The AI replicas successfully reproduced human behavior in four out of five experiments, suggesting they can model not just individual responses to specific topics but broad, complex behavioral patterns.

Easy AI clones: What are the implications?

AI systems that ‘clone’ human views and behaviors are big business, with Meta recently announcing plans to fill Facebook and Instagram with AI profiles that can create content and engage with users.

TikTok has also jumped into the fray with its new “Symphony” suite of AI-powered creative tools, which includes digital avatars that brands and creators can use to produce localized content at scale.

With Symphony Digital Avatars, TikTok lets eligible creators build avatars that represent real people, complete with a range of gestures, expressions, ages, nationalities and languages.

Stanford and DeepMind’s research suggests such digital replicas will become far more sophisticated – and easier to build and deploy at scale.

“If you can have a bunch of small ‘yous’ running around and actually making the decisions that you would have made – that, I think, is ultimately the future,” lead researcher Joon Sung Park, a Stanford PhD student in computer science, told MIT Technology Review.

Park notes that the technology has upsides, since accurate clones could support scientific research.

Instead of running expensive or ethically questionable experiments on real people, researchers could test how populations might respond to certain inputs. For example, clones could help predict reactions to public health messages or study how communities adapt to major societal shifts.

Ultimately, though, the same features that make these AI replicas valuable for research also make them powerful tools for deception.

As digital copies become more convincing, distinguishing authentic human interaction from AI is getting harder, as the onslaught of deepfakes has already shown.

What if such technology were used to clone someone against their will? What are the implications of creating digital copies closely modeled on real people?

The Stanford and DeepMind research team acknowledges these risks. Their framework requires clear consent from participants and allows them to withdraw their data, treating personality replication with the same privacy considerations as sensitive medical information.

That at least offers some theoretical protection against more malicious forms of misuse. Either way, we’re pushing deeper into the uncharted territory of human-machine interaction, and the long-term implications remain largely unknown.